Building Diphone

database

Although diphone-based

synthesizers are only one of a number of techniques

documented in FestVox, we believe they are, at present,

the most reliable and resource-effective method for

building new voices for general text-to-speech synthesizers.

A diphone here is two connected half-phones, where a

"phone" here may in fact be any segment including

a traditional phoneme, allophone or consonant cluster.

We carefully construct examples of each phone-phone

transition in our phoneset[appendix 2], so as to capture

all the implied sequential articulatory transitions,

even though some may not be phonotactically valid (like

[n:-ae]).

To fully exercise our techniques, we are collecting

one sets of diphones, at 32KHz,from a single speaker

of Bangla (IAR) with varying speaking rate. These databases

are being released publicly with an open license, so

that anyone who wishes can replicate our findings, study

the voice, teach about synthesis, or build their own

by comparison.

Diphone Synthesis

Diphone synthesis is one of the most popular methods

used for creating a synthetic voice from recordings

or samples of a particular person; it can capture a

good deal of the acoustic quality of an individual,

within some limits. The rationale for using a diphone,

which is two adjacent half-phones, is that the ``center''

of a phonetic realization is the most stable region,

whereas the transition from one ``segment'' to another

contains the most interesting phenomena, and thus the

hardest to model. The diphone, then, cuts the units

at the points of relative stability, rather than at

the volatile phone-phone transition, where so-called

coarticulatory effects appear.

There is clearly a simplifying assumption: that all

relevant phonetic realizations can be enumerated, and

that by simply collecting all of phone-phone transitions,

that any possible sequence of speech sounds in the target

language could be produced. Thus, with a 44-phone inventory,

one could collect a 44 * 44 = 1936 diphone inventory

and create a synthesizer that could speak anything,

given the imposition of appropriate prosody - intonation,

duration, and shift in spectral quality, as determined

by other modules in a general-purpose synthesizer.

For Bangla we had 2108 nonsense words generated by our

script and some of the non sense words contain invalid

combination so we manually excluded them. Some changes

in the Bd_schema.scm can result in better diphone list.

After hand correction we had 1410 nonsense words which

contained 2820 diphones for bangla. Using this diphone

list any speech can be produced including consonant

clusters.

Collecting a Diphone Set

Building a diphone synthesizer involves several steps:

• Choosing the phone set

• Designing carrier material

• Generating the prompts

• Preparing the audio collection environment

• Collecting the data

• Segmentation/Alignment

• Quality control

• Extracting pitchmarks and pitch-synchronous parameters

• Diphone index

• Amalgamation

Choosing the phone set

One should consider the phone set carefully for synthesis.

For Bangla there were no phoneset defined so we had

to make for our own purpose. Many may not agree with

the phoneset but it served us well for our diphone generation

and for other modules. The main objective of building

a phoneset for a language is to define all the phonemes

and other sound for the festival system.

Designing carrier material

We use nonsense carrier words to collect all possible

diphones, following [5]. Others have successfully used

natural carrier phrases, but the argued advantage of

natural delivery offers may also be a disadvantage as

people may assume too much, and fail to produce exactly

the desired phones. Within this framework, an experiment

may be carried out to compare the results of voices

made from nonsense words to one made from naturalistic

text, but we know of none having been performed as of

yet.

It should be noted that delivering diphones is not a

particularly natural endeavor. As these phone segments

will be extracted both for pitch and duration, it is

important that their delivery be consistent, so that

joins are more likely to be acceptable.

We believe that the use of nonsense carrier material

makes the delivery of the diphones more consistent.

Also, the pronunciation of a phonetic string is more

clearly defined in these nonsense words than in elicited

natural words. We generate carrier phrases so that,

where possible, we can extract the diphones from the

middle of a word. As it takes time for the human articulation

system to start, we do not want to extract diphones

from syllables at the start or end of words, unless

these transitions to or into silence (SIL) are part

of the diphone in question.

for example, from

# t aa k a k aa #

we would extract the diphones [k-a] and [a-k], and from

# t aa kh a kh aa #

we would get [kh-a] and [a-kh] from the prompt.

For each class in the language, consonant-vowel, vowel-consonant,

vowel-vowel, consonant-consonant, silence-phone, phone-silence,

and any other special sets like syllabic, consonant

clusters and allophones we build simple carrier sets

and loop through all possible values generating a long

list of strings of phones which contain all possible

diphones in the language to be recorded. Basic scripts

are provide for this that can be adapted for other language,

while specific scripts are provided for currently supported

languages.

Generating the

prompts

Once the phone set and the sort of carrier material

for each type has been chosen, the prompt list is produced

automatically from the specification using a set of

templates. Consonant-vowel and vowel-consonant pairs

are generally kept together, as shown in the example

above - [k-a] and [a-k] are generated in a single prompt.

Special contexts are also created for e.g. vowel-vowel

diphones, and for transitions to and from silences into

a phone.

We then synthesize these prompts using an existing synthesizer.

The prompts are generated for a number of reasons: first,

to play to the user while recording. Even highly trained

phoneticians make mistakes in reading 2000 prompts so

the synthesized prompts help guide the speaker to say

the right thing. Secondly, as we generate these prompts

at constant pitch and duration, this encourages the

speaker to do likewise. As we are going to modify the

pitch and duration independently, it is better if the

recording is in a monotone, and consistent. This, we

feel, is best done by effectively having the nonsense

word delivered in what almost sounds like a chant.

The second reason for generating prompts is their use

in labelling the spoken word which is described below.

Preparing the

audio collection environment

We collected the diphones at home and try to make it

as much clear and noiseless. For collecting voice data

we used several software. We collected the diphones

at Windows Xp environment as the sound editing facility

is better than Linux there. For sound collection the

default XP sound recorder can be used but for better

quality we used Sourge Forge. With Sourge Forge the

effect of the environment can be minimized so that the

noise is reduced. With Sourge Forge we had to do the

following –

1. Monitor Input level

2. Choose an input device and adjust levels for that.

3. Adjust for DC offset

4. Preparing for recording

5. Review recorded intakes.

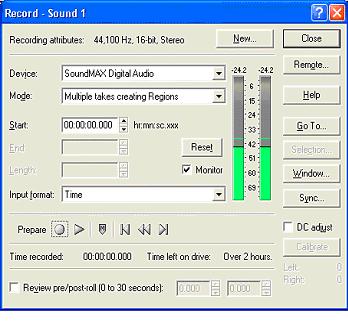

Fig. Adjusting audio recording input level.

After the recording of the nonsense words its worth

to make the voice levels of all files at the same level

as voice level may differ from file to file. This helps

to keep the consistency of the voice quality of the

synthesizer otherwise there will be a level factor error

at the synthesized speech output. For leveling the audio

signals we used the Wave Hammer tool of Sourge Forge.

We choose “Level at 6 db and maximize” option to level

our audio files.

Collecting the data

For collecting data

we used the sourge forge editor. Using the magnifying

tool the diphone segments can be extracted perfectly

so that no silence part are included in the segments.

Otherwise that could rduce te quality of diphone database.

Fig. Wave hammer for

leveling audio signals.

next>>

|